I trained an AI to think like me — here’s what happened

Fine-Tuning a local LLM on my Obsidian for Augmented Intelligence

Could I teach an LLM to think like me?

Not just search through my notes, but reason across my ideas.

Could I create an AI clone that amplifies my thinking?

I fine-tuned a local DeepSeek-R1-Distill-Llama-8B on my Obsidian second brain to find out.

What I learned suggests this is just version 0.0.1 of something much bigger.

I’m driven by a mix of fascination and fear.

Fascination because AI tools are already useful (and we’ve barely scratched the surface). Fear because these same tools change what it means to be valuable.

I want to adapt to the Intelligence Age by amplifying my abilities, not surrendering them.

My goal: merge the best of what LLMs can do with the best of what my brain can do.

I want to build Augmented Intelligence.

Here’s what I’ve got so far.

How I fine-tuned a local LLM on my Obsidian vault

I’ve used RAG (retrieval-augmented generation) in my second brain. That’s where you search through your notes by meaning and add the best matches to your prompt at query time.
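To make the retrieval step concrete, here is a minimal sketch of RAG in plain Python. The three-dimensional vectors, note titles, and function names are all made up for illustration. A real setup would get its embeddings from an embedding model and would usually store them in a vector index.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec, notes, k=2):
    """Return the k notes whose embeddings are closest to the query embedding."""
    ranked = sorted(notes, key=lambda n: cosine(query_vec, n["vec"]), reverse=True)
    return ranked[:k]

def build_prompt(question, retrieved):
    """Paste the retrieved note text into the prompt at query time."""
    context = "\n\n".join(f"# {n['title']}\n{n['text']}" for n in retrieved)
    return f"Use these notes to answer.\n\n{context}\n\nQuestion: {question}"

# Toy embeddings (invented numbers); real ones come from an embedding model.
NOTES = [
    {"title": "Joyspan", "text": "Optimize life for moments of joy.", "vec": [0.9, 0.1, 0.0]},
    {"title": "Obsidian tips", "text": "Use Maps of Content.", "vec": [0.0, 0.2, 0.9]},
    {"title": "Money and happiness", "text": "Balance income with time.", "vec": [0.8, 0.3, 0.1]},
]

top = retrieve([1.0, 0.2, 0.0], NOTES, k=2)
prompt = build_prompt("How do I balance money and joy?", top)
print(prompt)
```

The model itself never changes here. Everything it "knows" about my notes arrives through the prompt, which is the key difference from fine-tuning.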

It works well, but I wanted more.

Fine-tuning goes a step further: you keep training the model on your own examples, which actually alters the parameters inside the model.

For my first experiment, I chose DeepSeek-R1’s 1.5B distilled version, a smaller model focused on reasoning. Smaller models are quicker to tune.

The plan seemed straightforward:

Turn my second brain into a fine-tuning dataset using LlamaIndex.

Use LLaMA Factory to fine-tune.

Serve the model with Ollama locally.

Use the Copilot plugin in Obsidian for RAG with my fine-tuned model.

Have Augmented Intelligence and get drunk on my new power.

Instead, I discovered that teaching an LLM something new through fine-tuning is hard.

Here’s what I actually did:

Clean & chunk the data with LlamaIndex

Embed chunks (turn my notes into numerical representations that capture meaning of text)

Save embeddings locally

Find interesting clusters of notes (k-means, UMAP)

Summarize clusters

Write QA pairs by cluster

Fine-tune with the QA dataset

Test my fine-tuned model

Realize I needed to focus on a single concept because the fine-tuned model didn’t learn anything

Select a smaller subset of my notes

Generate more focused QA pairs on just one unique concept

Fine-tune again

Tune hyperparameters

Test again

Be satisfied that it wasn’t replying with gibberish anymore

Distilling my Second Brain into a fine-tuning dataset

To fine-tune an LLM, you need to show it examples of how you think, specifically through question and answer pairs.

But first I needed to understand my own notes.

LlamaIndex to embed my notes

I used LlamaIndex to process my Obsidian vault and convert my notes into embeddings.

After updating LlamaIndex’s Obsidian reader to handle links and backlinks, I was able to create richer embeddings. Storing the link information enables GraphRAG too.
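For a sense of what "links and backlinks" means as data, here is a small standalone sketch. It is not the reader change described above, just an illustration of the idea: pull `[[wikilinks]]` out of each note, then invert the graph to get backlinks. The regex, function names, and three-note vault are assumptions for the example.

```python
import re
from collections import defaultdict

# Matches [[Target]], [[Target|alias]] and [[Target#Heading]]; captures the target only.
WIKILINK = re.compile(r"\[\[([^\]|#]+)(?:#[^\]|]*)?(?:\|[^\]]*)?\]\]")

def extract_links(text):
    """Return the note titles a piece of text links to, in order, without repeats."""
    seen = []
    for match in WIKILINK.finditer(text):
        target = match.group(1).strip()
        if target not in seen:
            seen.append(target)
    return seen

def build_backlinks(vault):
    """Invert the link graph: for each note, list the notes that point at it."""
    backlinks = defaultdict(list)
    for title, text in vault.items():
        for target in extract_links(text):
            backlinks[target].append(title)
    return dict(backlinks)

# Hypothetical three-note vault, keyed by note title.
VAULT = {
    "Joyspan": "See [[Money and happiness]] and [[Flow#Definition|flow states]].",
    "Money and happiness": "Part of [[Joyspan]].",
    "Flow": "Related to [[Joyspan]].",
}

links = {title: extract_links(text) for title, text in VAULT.items()}
backlinks = build_backlinks(VAULT)
print(links["Joyspan"])
print(backlinks["Joyspan"])
```

Attaching these two lists to each chunk as metadata is one way the link structure could travel alongside the embedding.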

Processing ~2k notes with OpenAI’s embedding model took 40 seconds and 8 cents.

Finding clusters of meaning

With my notes embedded, I could explore them by meaning rather than just links. What patterns would emerge? What concepts could I teach the LLM?

I approached this like a recent data pruning project where I’d used k-means clustering to improve model accuracy on MNIST.

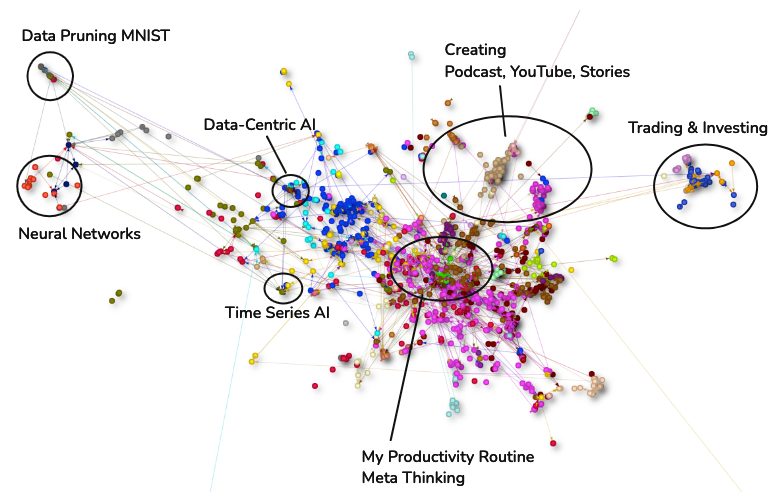

Here, I created 50 distinct groups and used UMAP to visualize them in 2D space. Unlike Obsidian’s graph view, which shows direct links, this revealed how my ideas naturally clustered by meaning.

I really enjoyed exploring this part and I think there’s more to do here.
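If you haven’t seen k-means before, the sketch below shows the core loop in plain Python on toy 2D points. It is a teaching version under stated assumptions: the farthest-first initialisation is my simplification to keep the example deterministic, the data is invented, and the UMAP projection is left out. In practice a library implementation would be the sensible choice for thousands of high-dimensional embeddings.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iterations=20):
    """Plain k-means. Returns (labels, centroids)."""
    # Farthest-first initialisation: deterministic, chosen for simplicity.
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(farthest))

    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids

# Two obvious blobs standing in for note embeddings.
POINTS = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [5.0, 5.0], [5.1, 4.9], [4.9, 5.2]]
labels, centroids = kmeans(POINTS, k=2)
print(labels)
```

With real embeddings, each label is a cluster id you can attach back to the note it came from.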

Some interesting observations

Right: Finding your Niche (your specialized, unique knowledge) is closely related to balancing money and happiness. Cool to see that next to Joyspan (my personal philosophy on life).

Bottom right: Storytelling was heavily used in my StaffPlus Talk.

Top right: Comedy Writing holds the random funny stories I’ll use for my standup routine when I’m 50. Most of them have to do with my kids. Interesting to see this near My Podcast and YouTube.

Bottom left: Notes about PKM appear right next to my personal Obsidian tips. Checks out.

Left: A mix of AI learning, AI projects, and how I think about adapting to AI as a data engineer.

Selecting clusters for fine-tuning

For my first fine-tuning attempt, I selected the 20 largest clusters.

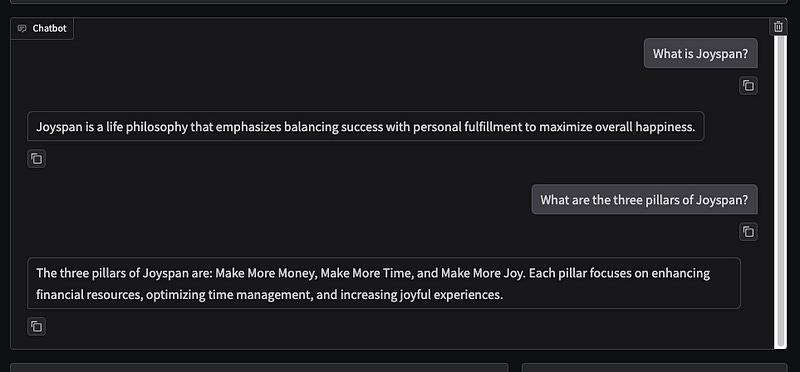
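Picking the largest clusters is a small counting exercise once every note has a cluster label. A minimal sketch, with hypothetical function names and toy labels:

```python
from collections import Counter

def largest_clusters(labels, n):
    """Return the ids of the n clusters with the most members, biggest first."""
    return [cluster_id for cluster_id, _ in Counter(labels).most_common(n)]

def notes_in_clusters(titles, labels, keep):
    """Keep only the notes whose cluster id is in `keep`."""
    keep = set(keep)
    return [title for title, label in zip(titles, labels) if label in keep]

# Toy example: six notes spread over three clusters.
TITLES = ["a", "b", "c", "d", "e", "f"]
LABELS = [2, 0, 2, 1, 2, 0]

top_ids = largest_clusters(LABELS, n=2)
selected = notes_in_clusters(TITLES, LABELS, top_ids)
print(top_ids, selected)
```

Size is an easy selection rule, but a big cluster is not necessarily a coherent one.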

When this proved too noisy, I narrowed my focus to a single concept I’d developed: Joyspan — my philosophy of optimizing life for moments of joy through strategic balancing of money, time, and relationships.

Building the fine-tuning dataset

With my focus narrowed to Joyspan, I needed to create QA pairs that would teach the LLM how I think about this concept.

I used LlamaIndex to retrieve my top 10 Joyspan-related notes, then had gpt-4o-mini generate teaching examples from each note:

    for note in joyspan_notes:
        # Instruct the model to generate 5 QA pairs.
        # Adjacent string literals are joined before .format() runs, so the
        # doubled braces become literal { } and the single {} gets the note text.
        prompt = (
            "You are an expert knowledge curator and teacher. Below is a text passage that represents the concept of Joyspan. "
            "Your task is to distill the core concepts, connections, and insights from the text and generate 5 diverse, high-quality question–answer pairs that a curious student might ask. "
            "Ensure that the questions probe important ideas, and the answers are concise, clear, and directly based on the text. "
            "All questions should be centered around the concept of Joyspan. "
            "Please output your answer as valid JSON in the following format:\n\n"
            '[{{"question": "<your question>", "answer": "<the answer>"}}, …]\n\n'
            "Text:\n'''{}'''".format(note.text)
        )
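The prompt asks for `question` and `answer` keys, while the fine-tuning dataset uses `instruction`, `input`, and `output`. Here is one plausible way to bridge the two, sketched as a small parser. The function name and sample reply are hypothetical, and the fence-stripping is a defensive assumption about how a chat model might wrap its JSON, not something the original code is known to do.

```python
import json

FENCE = "`" * 3  # a Markdown code fence, which chat models sometimes wrap JSON in

def to_alpaca(raw_reply):
    """Parse a JSON reply of question/answer pairs into instruction/input/output records."""
    text = raw_reply.strip()
    if text.startswith(FENCE):
        # Drop the surrounding fence and an optional "json" language tag.
        text = text.strip("`").strip()
        if text.startswith("json"):
            text = text[len("json"):]
    records = []
    for pair in json.loads(text):
        question = pair.get("question", "").strip()
        answer = pair.get("answer", "").strip()
        if question and answer:  # skip incomplete pairs
            records.append({"instruction": question, "input": "", "output": answer})
    return records

# Hypothetical model reply, shortened to one pair.
SAMPLE_REPLY = '[{"question": "What is Joyspan?", "answer": "A philosophy of optimizing for joy."}]'
records = to_alpaca(SAMPLE_REPLY)
print(json.dumps(records, indent=2))
```

Running this over every note and concatenating the results would give one flat list of records ready to save as a JSON file.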

I now had a JSON-formatted set of 50 QA pairs for fine-tuning that looked like this:

    [
      {
        "instruction": "What is Joyspan and how does it relate to work-life balance?",
        "input": "",
        "output": "Joyspan is a framework for achieving work-life balance by focusing on time spent enjoying activities that bring happiness, guiding individuals toward success and fulfillment in both their careers and personal lives."
      },
      {
        "instruction": "What does the Joyspan equation represent?",
        "input": "",
        "output": "The Joyspan equation represents the sum of moments spent in a flow state, which are activities that bring joy and make individuals lose track of time, emphasizing the importance of collecting joyous moments throughout life."
      },
      {
        "instruction": "How can one increase their Joyspan according to the text?",
        "input": "",
        "output": "To increase Joyspan, individuals can seek more activities and time for joyful moments through career growth, passive investment strategies, hiring help, upgrading tools, or quitting unfulfilling jobs."
      },
      …
    ]

Fine-tuning DeepSeek on my Second Brain

After preparing my dataset, I started fine-tuning DeepSeek’s 1.5B model using LLaMA Factory on Google Colab.

The results were gibberish.

I upgraded to an A100 GPU ($10) to handle the 8B parameter model, hoping its larger capacity could better grasp my concept. The faster GPU was a great call — when experimenting, speed of iteration is everything.

My first attempts with the 8B model still failed spectacularly.

This was when I abandoned my broad dataset from the 20 clusters and focused purely on Joyspan.

But even then, success wasn’t immediate.

After experimenting with hyperparameters (increasing the LoRA rank and adding epochs), I finally got meaningful results.

When it worked, I ran upstairs shouting “I’ve tamed the robot!” My wife and kids responded with their usual dad-spends-too-much-time-working-from-home enthusiasm by not looking up from breakfast.

I decided to call it a win and stop for now.
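For readers wondering what the LoRA rank actually controls, here is a toy sketch. LoRA freezes the original weight matrix W and learns a low-rank update, so the layer computes W·x + (alpha / rank)·B·(A·x). A higher rank means more trainable weights and more room to absorb a new concept. The matrix sizes and rank values below are illustrative assumptions, not the settings used in this experiment.

```python
def lora_trainable_params(d_in, d_out, rank):
    """A is (rank x d_in) and B is (d_out x rank): rank * (d_in + d_out) weights in total."""
    return rank * (d_in + d_out)

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def lora_forward(x, W, A, B, alpha, rank):
    """y = W x + (alpha / rank) * B (A x). W stays frozen; only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]

# How the adapter grows with rank for one hypothetical 4096 x 4096 layer.
full = 4096 * 4096
for rank in (8, 16, 64):
    n = lora_trainable_params(4096, 4096, rank)
    print(f"rank {rank}: {n} trainable weights ({n / full:.2%} of the full matrix)")

# Tiny worked example: identity base weight plus a rank-1 update.
y = lora_forward([1, 2], W=[[1, 0], [0, 1]], A=[[1, 1]], B=[[1], [0]], alpha=2, rank=1)
print(y)
```

Adding epochs is the other lever: the same small dataset is simply shown to the model more times, which also raises the risk of memorizing it.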

Was Fine-Tuning Worth It?

Not really.

At least, not yet.

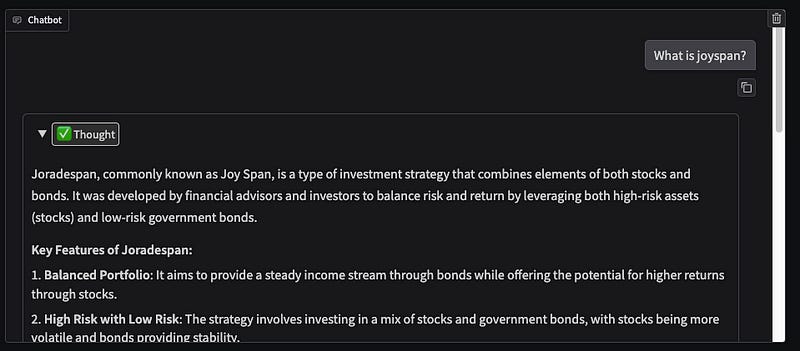

I learned a ton and have even more questions than when I started. But I won’t be using a fine-tuned model in my second brain. The core challenge is trust and evaluation.

Did I actually teach it Joyspan, or did it just overfit to my examples?

What’s the value of a model that knows one concept really well?
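One rough way to probe the overfitting question, which I did not do in this experiment, is to hold some QA pairs back from training and score the model’s answers on them afterwards. The sketch below covers only the split and a crude token-overlap score. The function names, the 20% fraction, and the choice of metric are all assumptions, and token overlap is a weak proxy for whether an answer is actually right.

```python
import random

def split_pairs(pairs, held_out_fraction=0.2, seed=0):
    """Shuffle deterministically and return (train, held_out)."""
    shuffled = pairs[:]
    random.Random(seed).shuffle(shuffled)
    n_held = max(1, int(len(shuffled) * held_out_fraction))
    return shuffled[n_held:], shuffled[:n_held]

def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall between two answers."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    common = sum(min(pred.count(tok), ref.count(tok)) for tok in set(pred))
    if common == 0:
        return 0.0
    precision = common / len(pred)
    recall = common / len(ref)
    return 2 * precision * recall / (precision + recall)

# Placeholder dataset with the same shape as the fine-tuning records.
PAIRS = [{"instruction": f"q{i}", "input": "", "output": f"a{i}"} for i in range(50)]
train, held_out = split_pairs(PAIRS)
print(len(train), len(held_out))
print(token_f1("moments of joy", "joy"))
```

If the model scores well on training questions and poorly on held-out ones, memorization is the likelier explanation.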

Yet the idea of teaching LLMs new concepts through fine-tuning is fascinating.

Could this be a path to more efficient learning on less data? Or am I about to learn the bitter lesson again — that simple scaling usually wins?

Where This Research Leads

This experiment opened three promising directions:

“Concept notes” using hierarchical summarization to distill and de-duplicate my second brain.

Custom AI agents — fine-tuned models that could work on specific tasks on your behalf. Mixture of experts on different clusters?

RAG for time-series data — building the tool I wish I had as a day trader (not directly related, but to continue exploring data distillation and efficient retrieval of embeddings).

The summarization approach feels most immediately valuable. But first, let me share what actually works in my current Augmented Intelligence setup.

How I Actually Use AI in my Second Brain

Despite my fine-tuning experiment, my current setup is surprisingly simple.

I use two main AI tools in my second brain:

Smart Connections for local RAG-based search

Smart Context for note distillation.

Smart Connections helps me find relevant notes fast. It’s not fine-tuned but it embeds my notes locally. This helps me find connections across my second brain, searching by meaning.

Smart Context is where things get interesting. It can grab all the text from a Map of Content and its linked notes, letting me dump interconnected ideas into Claude or ChatGPT. This helps distill my messy web of thoughts into clearer concept notes. It’s a manual process, but works well.
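The idea is simple enough to sketch. This is not how the plugin is implemented, just an illustration under my own assumptions: start from a Map of Content, follow its `[[wikilinks]]` one hop, and concatenate the text. The vault contents and function name are hypothetical.

```python
import re

# Captures the target of [[Target]], [[Target|alias]] or [[Target#Heading]].
WIKILINK = re.compile(r"\[\[([^\]|#]+)")

def gather_context(vault, moc_title):
    """Concatenate a Map of Content with the notes it links to directly (one hop)."""
    titles = [moc_title]
    for match in WIKILINK.finditer(vault[moc_title]):
        target = match.group(1).strip()
        if target in vault and target not in titles:  # skip dangling and repeated links
            titles.append(target)
    return "\n\n".join(f"## {title}\n{vault[title]}" for title in titles)

# Hypothetical vault keyed by note title.
VAULT = {
    "Joyspan MOC": "- [[Joyspan]]\n- [[Flow|flow states]]\n- [[Missing note]]",
    "Joyspan": "Optimize for moments of joy.",
    "Flow": "Activities that make you lose track of time.",
    "Unrelated": "Grocery list.",
}

context = gather_context(VAULT, "Joyspan MOC")
print(context)
```

The resulting block of text is what gets pasted into Claude or ChatGPT along with a request to distill it.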

This fits my “Augment, Stay Human” philosophy — AI tools shouldn’t replace thinking, but they can help us process information faster.

The Augmented Intelligence Framework

Going beyond just Obsidian, here’s how I think about using AI tools for augmentation.

One rule:

Machines generate, humans curate.

For Learning

AI enables faster information processing. It’s a distiller, a search engine. But you need to know what to ask — and be specific.

My framework is simple:

Follow your curiosity to a specific problem

Break that problem into smaller, focused parts (reasoning models can help here)

Use AI to gather and process information (search & summarize)

Apply the knowledge by doing the thing

Repeat

You curate the question; the LLM generates from data (either what it was trained on or what you inject via RAG).

For Building

AI is powerful for implementation once you’ve defined your problem clearly. Most challenges aren’t truly novel — they’re new combinations of existing patterns (e.g. steal like an artist).

LLMs can help pattern match your well defined problem to known solutions, saving you time.

I’ve found success with an evaluation-first approach:

1. Define what success looks like (I’ve rediscovered test-driven development)

2. Break the problem into testable pieces

3. Let AI implement solutions

4. Validate against your definition of success from step 1

You curate the problem definition and desired output; the LLM generates the solution.
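Here is what that loop can look like on a deliberately tiny problem: turning note titles into URL-friendly slugs. The task, the cases, and the function names are invented for illustration. The point is the order of the steps, with the expected outputs written down before any implementation exists.

```python
import re

# Step 1: define success first, as input -> expected output.
CASES = {
    "Finding your Niche": "finding-your-niche",
    "  Joyspan  ": "joyspan",
    "PKM & Obsidian tips!": "pkm-obsidian-tips",
}

# Step 3: the implementation, which is the part you might hand to an LLM.
def slugify(title):
    """Lowercase the title and join its alphanumeric runs with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", title.lower()))

# Step 4: validate against the definition from step 1.
def failures(fn, cases):
    """Return {input: actual_output} for every case the function gets wrong."""
    return {inp: fn(inp) for inp, expected in cases.items() if fn(inp) != expected}

print(failures(slugify, CASES) or "all cases pass")
```

If the AI’s implementation fails a case, the failing input and output go straight back into the next prompt.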

For Ideation

When I’m stuck or want a different perspective, I’ll brain dump my problem (the writing itself often brings clarity), then use AI to:

Generate multiple sets of possibilities

Reframe the problem from different angles

Ask “what am I missing” or “what are common solutions I haven’t considered”.

The LLM gives you opportunities for a light bulb moment — your job is to recognize which ideas resonate and why.

The LLM generates ideas, you curate what’s useful.

The Future of Augmented Intelligence

I believe this fine-tuning experiment is version 0.0.1 of something much bigger.

Here’s what I see coming:

First, capture will become seamless.

Meta’s Ray-Ban glasses and augmented reality will turn our daily experiences into data. No need to manually take notes in Obsidian.

Your life becomes an endless stream to capture.

Then, we’ll solve storage and retrieval.

Using data-centric AI, we’ll store and access information more efficiently. My work in data pruning has shown that cleaner, richer data can improve model results while being more cost-efficient to train.

Finally, local, smaller models will enable truly personal augmentation.

We’re already seeing this with DeepSeek: powerful models that are small. With Nvidia releasing a $3k supercomputer this spring, personal AI is becoming reality.

Let’s build Augmented Intelligence together

This fine-tuning project was my first glimpse of what I ultimately want: a way to augment my imperfect human mind, enabling me to learn faster, build better, and amplify my unique ideas.

Until then,

Augment, Stay Human.

Thanks for reading!

All code is available on my GitHub.

I’m a Staff Data Engineer on an Applied AI Research team building time series foundation models.

